Bio

I am an Associate Professor in the Machine Learning Department at Carnegie Mellon University. Prior to that, I was a Norbert Wiener Research Fellow jointly in the Department of Applied Mathematics and IDSS at MIT. I received my PhD from the Computer Science Department at Princeton University, advised by Sanjeev Arora.

My research interests lie at the intersection of machine learning, statistics, and theoretical computer science, spanning topics such as (probabilistic) generative models, algorithmic tools for learning and inference, representation and self-supervised learning, out-of-distribution generalization, and applications of neural approaches to natural language processing and scientific domains. More broadly, the goal of my research is a principled, mathematical understanding of the statistical and algorithmic problems arising in modern machine learning paradigms.

I am the recipient of an NSF CAREER Award, an Amazon Research Award, and a Google Research Award. My research is also supported in part by several NSF and DoD awards, as well as an OpenAI Superalignment grant.

News & Events

Invited talk at University College London (UCL), Gatsby Unit

Link for more info: https://www.ucl.ac.uk/life-sciences/gatsby/news-and-events

Invited talk at Columbia University Data Science Institute, Machine Learning and AI Seminar Series

Panelist and mentor at the Learning Theory Alliance workshop on Harnessing AI for Research, Learning, and Communicating

Participant in the Aspen Meeting on Foundation Models

Guest on the Delta Institute podcast

Link to recording: https://www.youtube.com/watch?v=6ux5yryGvFU

Academic Positions

Associate Professor

Carnegie Mellon University: Machine Learning Department

2025 - present

Assistant Professor

Carnegie Mellon University: Machine Learning Department

2019 - 2025

Norbert Wiener Fellow

MIT: IDSS and Applied Mathematics

2017 - 2019

Education

Ph.D. in Computer Science

Princeton University

Advised by Sanjeev Arora

2012 - 2017

B.Sc. in Computer Science

Princeton University

2008 - 2012

Service

Senior Area Chair

NeurIPS 2024

Area Chair

ICLR 2023, 2024

NeurIPS 2020, 2021, 2022, 2023

COLT 2022

UAI 2022

Program Committee

FOCS 2020, 2022

Awards

Google Research Award

2024

Algorithmic Foundations for Generative AI: Inference, Distillation and Non-Autoregressive Generation

NSF CAREER Award

2023

Theoretical Foundations of Modern Machine Learning Paradigms: Generative and Out-of-Distribution

OpenAI Superalignment Award

2023

Research support for AI alignment work

Amazon Research Award

2022

Causal + Deep Out-of-Distribution Learning

Research Areas

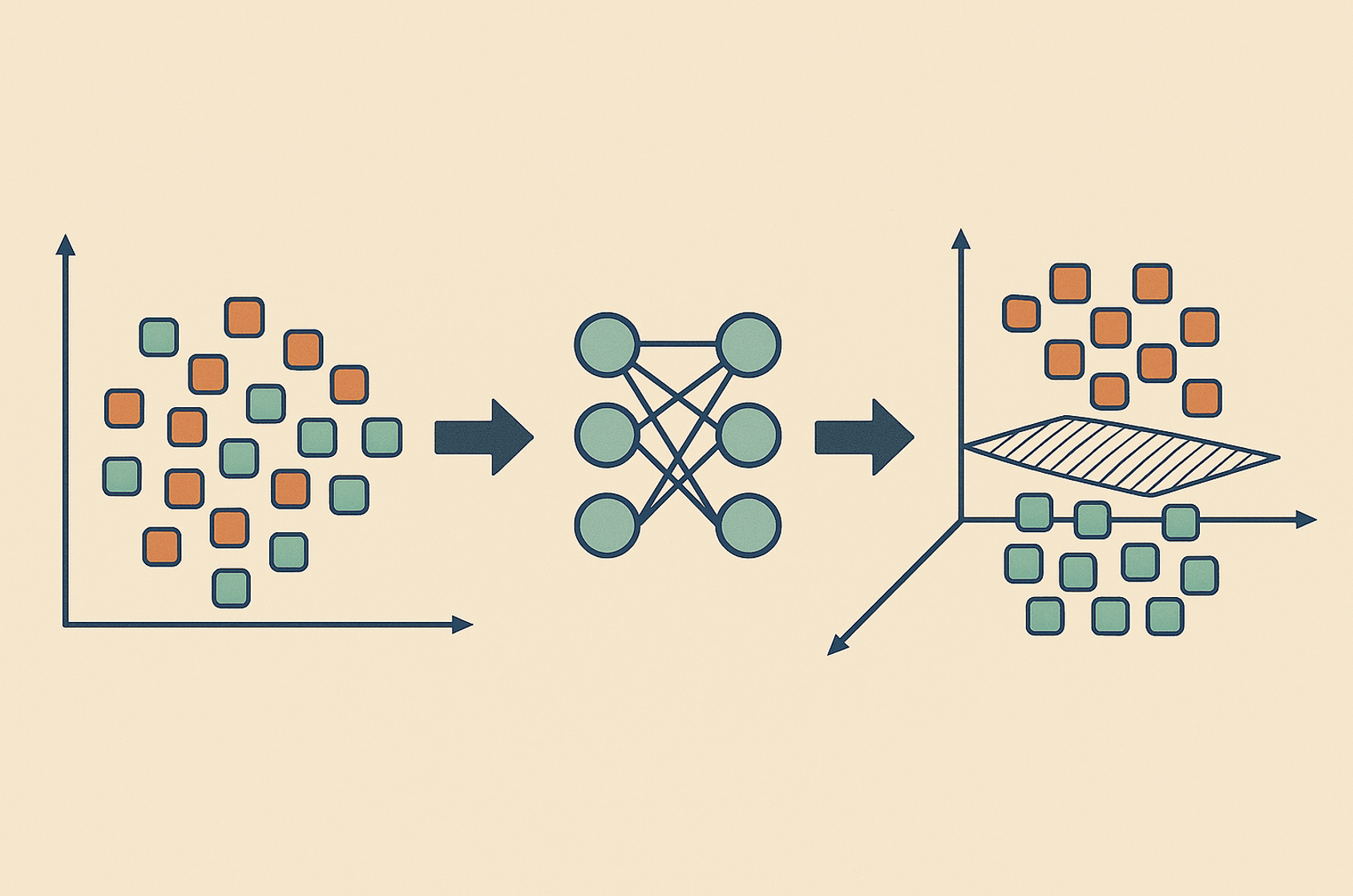

Generative Models

Algorithmic and statistical foundations of generative models, from GANs to diffusion models.

Representation learning

Methodological foundations for learning, understanding and interpreting representations.

Out-of-Distribution Generalization

Robustness and adaptation to benign and adversarial distribution shifts.

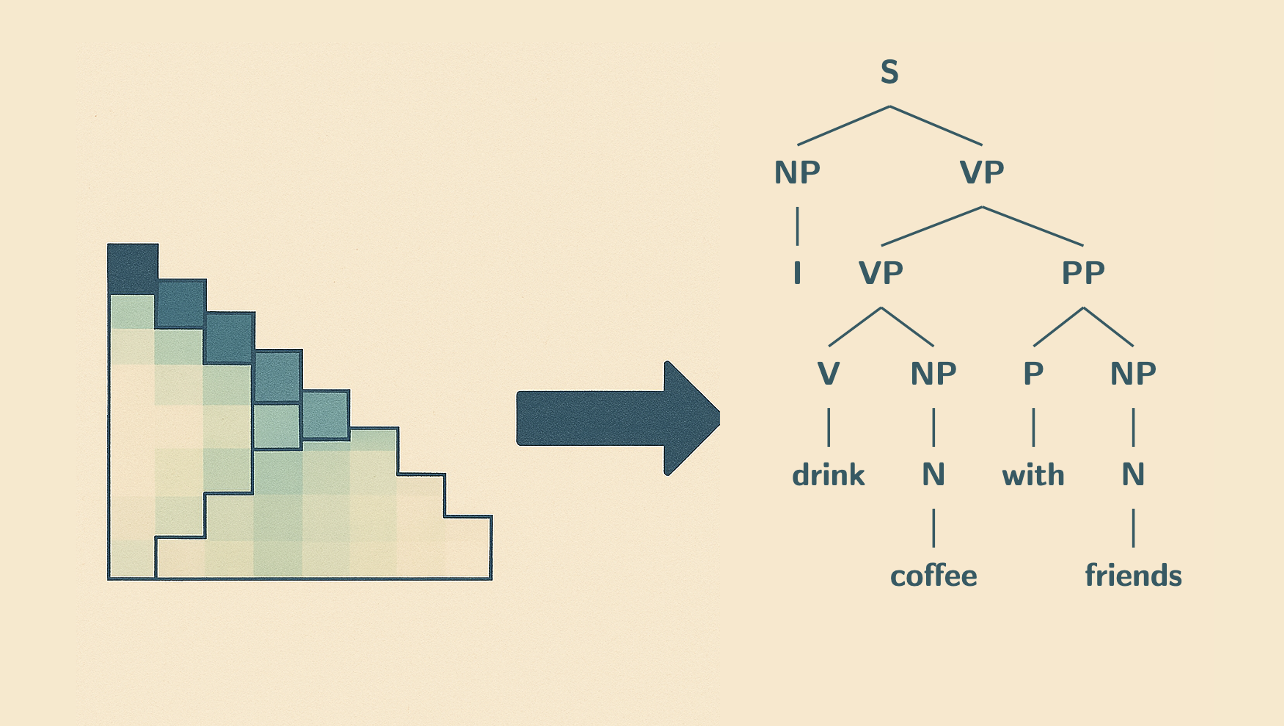

Neural Language Models

Theoretical and practical aspects of deep learning approaches to natural language processing.

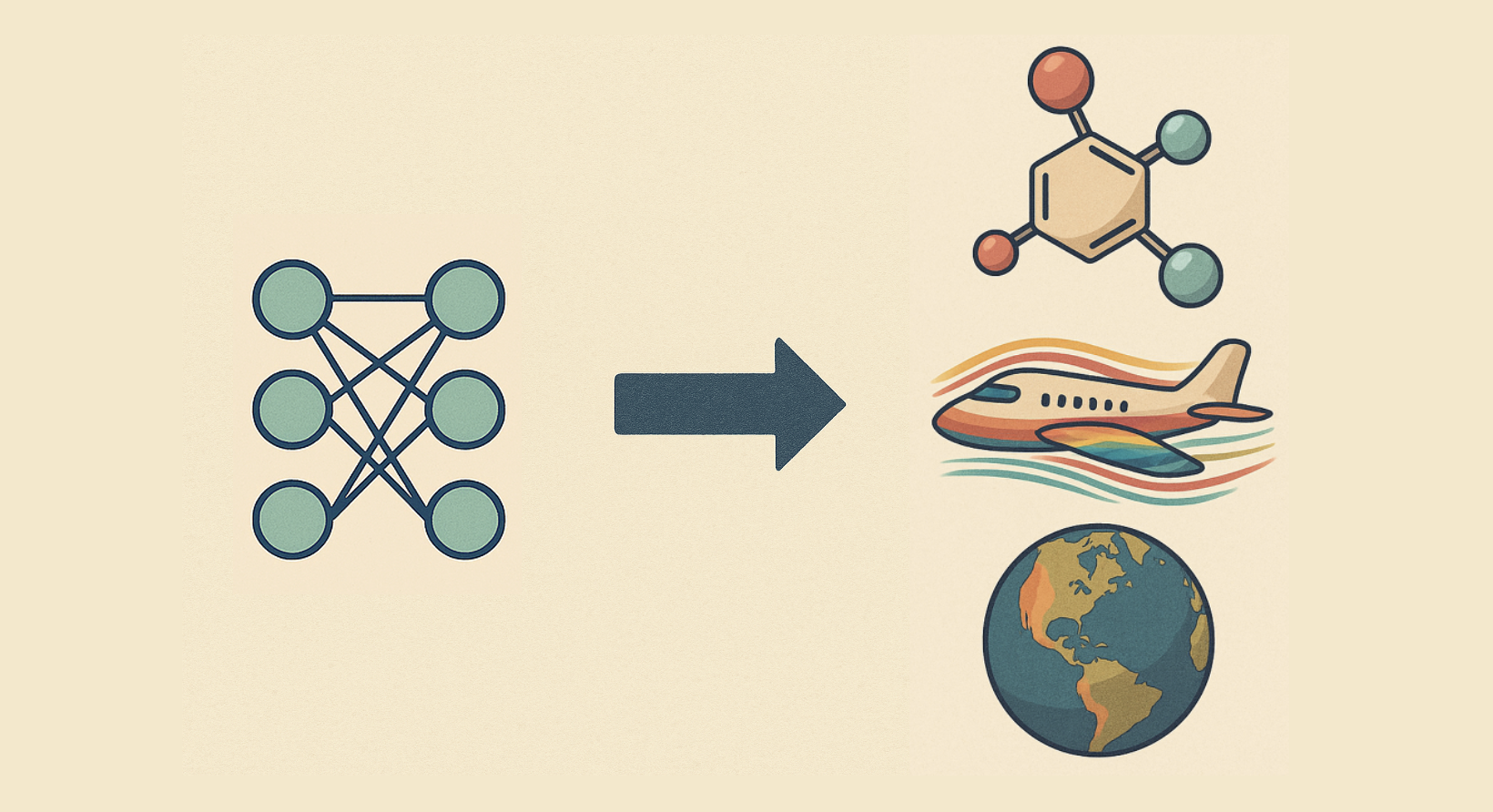

AI for Scientific Applications

Machine learning approaches for the sciences.

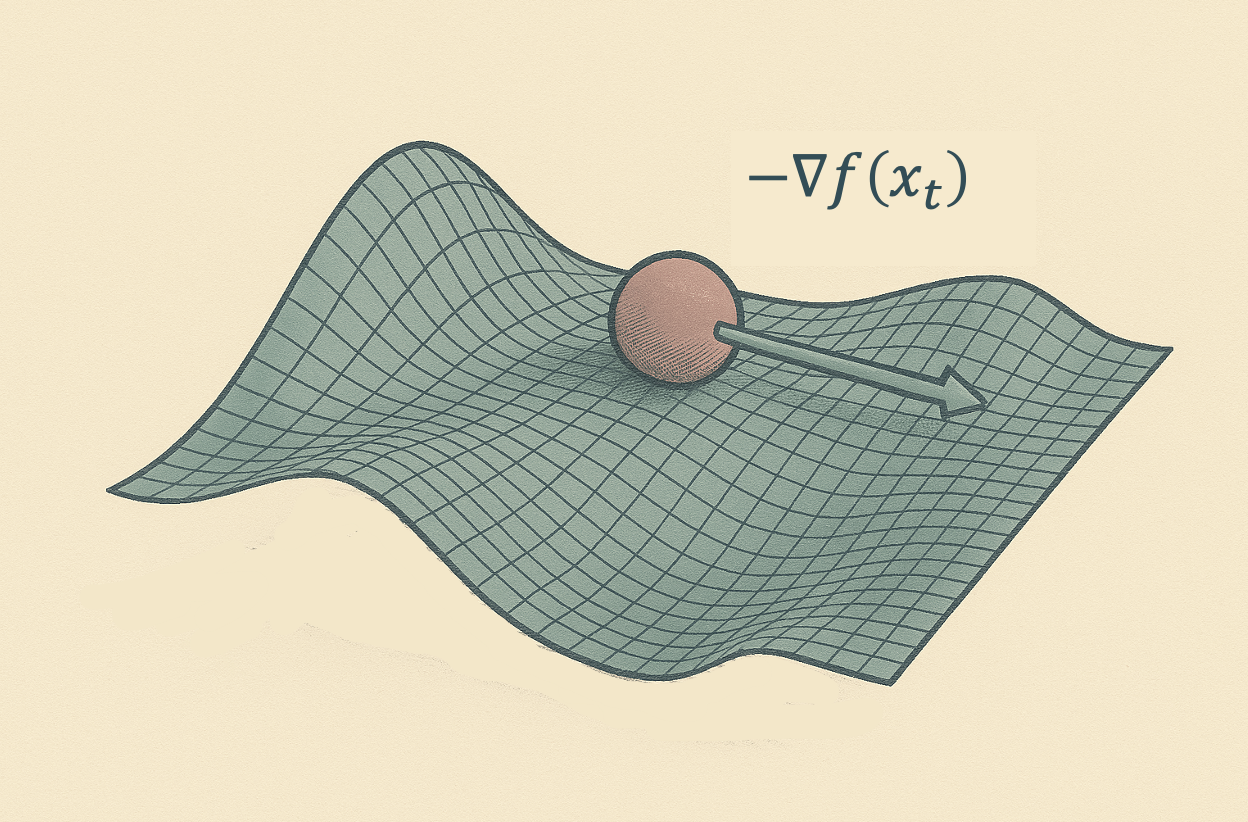

Sampling and Optimization

Algorithms and theory for computationally and statistically efficient sampling and optimization.

Publications

A curated selection of key publications across research areas:

With Dhruv Rohatgi, Abhishek Shetty, Donya Saless, Yuchen Li, Ankur Moitra and Dylan J. Foster

Manuscript

With Kaiyue Wen, Yuchen Li and Bingbin Liu

NeurIPS 2023

With Frederic Koehler and Alexander Heckett

ICLR 2023, Oral Equivalent (Top 5% of Papers)

With Tanya Marwah and Zachary C. Lipton

NeurIPS 2021, Spotlight

With Rong Ge and Holden Lee

NeurIPS 2018

With Sanjeev Arora and Yi Zhang

ICLR 2018

With Sanjeev Arora, Yuanzhi Li, Yingyu Liang and Tengyu Ma

Transactions of the Association for Computational Linguistics (TACL), 2016

Talks

Recent talks (2023-2026):

In defense of (lots & lots of, diverse) theory: Lessons from history and philosophy of science

Simons Institute for the Theory of Computing, Modern Paradigms in Generalization Reunion Workshop, 01/2026

Architectural Nuances and Benchmark Gaps in Scientific ML

IMSI Workshop on Statistical and Computational Challenges in Probabilistic Scientific Machine Learning, 06/2025

Architectural Choices in Scientific ML: A View Through the Lens of Theory

Columbia University Machine Learning and AI Seminar Series, 11/2025

Theoretical perspectives on modern machine learning paradigms: generative, scientific and out-of-distribution

UC Berkeley CS seminar, 03/2025

Toward Understanding and Mitigating Error Amplification in Long-Horizon Tasks

UCL Computational Neuroscience Unit External Speaker Series, 12/2025

The statistical cost of score-based losses

Simons Institute for the Theory of Computing, Modern Paradigms in Generalization Boot Camp, 09/2024

Neural networks for PDEs: representational power and inductive biases

Workshop on Mathematics of Data, Institute for Mathematical Sciences at NUS, 01/2024

From algorithms to neural networks and back

ETH Seminar, 07/2024

The statistical cost of score-based losses

Oxford University, Computational Statistics and Machine Learning Seminar, 07/2024

Discernible patterns in trained Transformers: a view from simple linguistic sandboxes

Theory of Interpretable AI seminar, 11/2024

Neural networks for PDEs: representational power and inductive biases

CNLS Annual Conference on Physics-Informed Machine Learning, 10/2024

The statistical cost of score-based losses

Duke University Computer Science Department Colloquium, 11/2024

The statistical cost of score matching

Joint Mathematics Meetings, 01/2024

Neural networks for PDEs: representational power and inductive biases

Meeting in Mathematical Statistics, CIRM, 12/2024

The statistical cost of score-based losses

Newton Institute program 'Diffusion Models in Machine Learning: Foundations, generative models and non-convex optimisation', 07/2024

The statistical cost of score-based losses

SIAM Mathematics of Data Science (MDS), 10/2024

The statistical cost of score-based losses

University College London (UCL), 07/2024

The statistical cost of score-based losses

Webinar for the Section on Statistical Learning and Data Science (SLDS) of the American Statistical Association (ASA), 05/2024

From algorithms to neural networks and back

Mathematics of Modern Machine Learning (M3L) Workshop at NeurIPS 2023, 12/2023 (talk starts at 22:15)

The statistical cost of score matching

IDSS Stochastics and Statistics Seminar, MIT, 02/2023

Fit like you sample: sample-efficient score matching from fast mixing diffusions

Allerton Conference, 09/2023

The statistical cost of score matching

Microsoft Research Redmond, 08/2023

The statistical cost of score matching

Yale FDS Workshop 'Theory and Practice of Foundation Models', 10/2023

The statistical cost of score matching

Meeting in Mathematical Statistics, CIRM, 12/2023

Students

Current Students

PhD in Machine Learning

PhD in Computer Science

PhD in Machine Learning

PhD in Machine Learning

Alumni

PhD in Machine Learning (2025)

Research Fellow at the Flatiron Institute and Polymathic AI

Visiting undergraduate student from Tsinghua University (Spring 2023)

PhD student at Stanford University

Masters in Machine Learning (MSML)

Masters in Machine Learning (MSML)

Masters in Machine Learning (MSML)

Apple

Masters in Machine Learning (MSML)

PhD student at University of Washington